sqlite3.connect(database, timeout=5.0, detect_types=0, isolation_level='DEFERRED', check_same_thread=True, factory=sqlite3.Connection, cached_statements=128, uri=False)

Parameters

database (path-like object) – The path to the database file to be opened. Pass ":memory:" to open a connection to a database that is in RAM instead of on disk.

timeout (float) – How many seconds the connection should wait before raising an OperationalError when a table is locked. If another connection opens a transaction to modify a table, that table will be locked until the transaction is committed.

detect_types (int) – Control whether and how data types not natively supported by SQLite are looked up to be converted to Python types, using the converters registered with register_converter(). Set it to any combination (using |, bitwise or) of PARSE_DECLTYPES and PARSE_COLNAMES to enable this. Column names take precedence over declared types if both flags are set. Types cannot be detected for generated fields (for example max(data)), even when the detect_types parameter is set; str will be returned instead. By default (0), type detection is disabled.

isolation_level (str | None) – The isolation_level of the connection, controlling whether and how transactions are implicitly opened. Can be "DEFERRED" (default), "EXCLUSIVE" or "IMMEDIATE"; or None to disable opening transactions implicitly.

check_same_thread (bool) – If True (default), ProgrammingError will be raised if the database connection is used by a thread other than the one that created it. If False, the connection may be accessed in multiple threads.
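As a rough illustration of how these parameters fit together, the sketch below opens an in-memory database with an explicit timeout, declared-type detection, and implicit transactions disabled. The `point` type, the `convert_point` function, and the `shapes` table are made up for this example; the timeout value is an arbitrary assumption.

```python
import sqlite3


def convert_point(raw: bytes) -> tuple:
    """Hypothetical converter: turn b"x;y" into a tuple of two floats."""
    x, y = raw.split(b";")
    return float(x), float(y)


# Converters always receive the stored value as bytes.
sqlite3.register_converter("point", convert_point)

con = sqlite3.connect(
    ":memory:",                            # database lives in RAM, not on disk
    timeout=10.0,                          # wait up to 10 s on a locked table (arbitrary)
    detect_types=sqlite3.PARSE_DECLTYPES,  # pick the converter from the declared column type
    isolation_level=None,                  # do not open transactions implicitly
    check_same_thread=True,                # default: only the creating thread may use it
)

# The declared column type "point" is what PARSE_DECLTYPES matches on.
con.execute("CREATE TABLE shapes(p point)")
con.execute("INSERT INTO shapes(p) VALUES (?)", ("1.5;2.5",))

row = con.execute("SELECT p FROM shapes").fetchone()
print(row)

con.close()
```

Because `detect_types` is set, the value comes back as a Python tuple rather than the stored string. A generated expression such as `max(p)` would not be converted, as noted in the parameter description.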

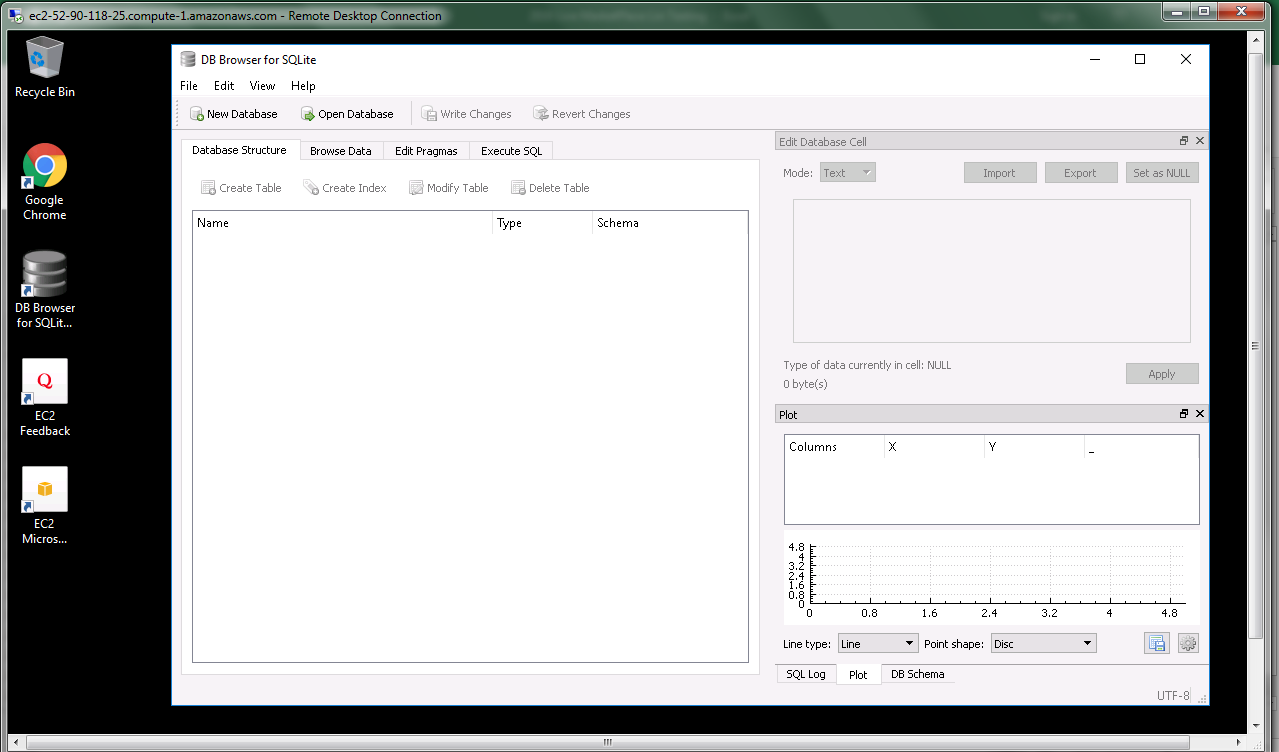

SQLITE BROWSER NOT RESPONDING HOW TO

How to use placeholders to bind values in SQL queries

How to adapt custom Python types to SQLite values

How to convert SQLite values to custom Python types

How to use the connection context manager

Explanation for in-depth background on transaction control.

Only the CPU usage rises, from 0% initially up to about 20% (sometimes less), when I scroll (up/down or left/right) in the browser, click on a menu item (say File), or switch from another application to the browser. It took about 6 seconds to open the Browse Data tab once the db was opened in the browser.

bloat1b constantly takes about 340 MB of memory. The CPU usage rises from 0% initially up to about 22-26% (sometimes less) under similar circumstances as above. It took about 12 seconds (twice as long as bloat1) to open the Browse Data tab.

My original db constantly takes about 75-80 MB of memory. It took 3 seconds to open the Browse Data tab.

One interesting thing I noticed is that when the field with large text is not in the viewable area of the browser (when I resize the browser and scroll away from this field), the browser is fast and CPU usage is under 5%. All the problems seem to occur when I scroll right towards the field with large text. While scrolling right, it scrolls fast until the field is encountered, then hangs for a couple of seconds, then loads and completes the scroll. Once this field is in view, the problems are as described initially.

SQLITE BROWSER NOT RESPONDING FULL

Can confirm long strings in a field make DB4S cough. Shove 100,000 characters in a field, and DB4S struggles browsing data or viewing records via the 'Execute SQL' tab. It didn't make much difference whether the field held one long continuous line of text or text with spaces in it (I thought the grid would prefer the latter).

Creating a SQL query without the long field (so 'select rowid from a') is speedy. This would point to it being a 'displaying the data' issue rather than a 'DB4S can't cope with large blobs of data' issue. As the grid can only display X characters, would it be worth the grid only showing the first X characters of the field? Or would that have too much of a speed impact to be worth it?

I just changed the database to 200,000 chars in each record, and it's a lot slower. The problem isn't twice as bad with 200,000 characters in each field, but it is worse. Obviously, if you're storing 300 KB pictures in a database (as per …), this would explain the even longer delays.

The memory usage is a bit strange! Was it with bloat1, bloat1b, or the original one I shared? On my PC, …

Using select substr(a,1,40000) from a works, but only in the 'Execute SQL' tab. It would be handy if the 'Browse Data' tab could 'truncate' data, with the 'Edit Data Cell' pane obviously showing the full text.
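The query-side workaround described above can be sketched with Python's sqlite3 module. This is only an illustration of truncating with `substr` before the data reaches a display layer; it does not reproduce DB4S's grid behaviour. The table `a` with column `a` mirrors the queries quoted in the thread, while the row count, field size, and `PREVIEW_CHARS` are assumptions chosen for the example.

```python
import sqlite3

ROWS = 50              # assumed number of records
FIELD_CHARS = 100_000  # field size mentioned in the thread
PREVIEW_CHARS = 200    # hypothetical number of characters a grid cell would show

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE a(a TEXT)")
con.executemany(
    "INSERT INTO a(a) VALUES (?)",
    [("x" * FIELD_CHARS,) for _ in range(ROWS)],
)

# Full fetch: every row carries the complete 100,000-character value.
full = con.execute("SELECT a FROM a").fetchall()

# Truncated fetch: only the first PREVIEW_CHARS characters per row.
preview = con.execute(
    "SELECT rowid, substr(a, 1, ?) FROM a", (PREVIEW_CHARS,)
).fetchall()

# Query that skips the long field entirely, as in 'select rowid from a'.
ids = con.execute("SELECT rowid FROM a").fetchall()

full_chars = sum(len(text) for (text,) in full)
preview_chars = sum(len(text) for _, text in preview)
print(f"full fetch: {full_chars} characters, preview fetch: {preview_chars} characters")

con.close()
```

The full value would still be fetched on demand for a single row, for example when a cell is opened for editing, using a query such as `SELECT a FROM a WHERE rowid = ?`.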
